Research

BacToMars

Wellesley College's Human Computer Interaction Lab

Project Brief:

...wip...

My Part in the Project Included:

- Designing, creating, and implementing graphics

- Designing and creating mockups of new user interfaces

Publications:

- O. Shaer, O. Nov, J. Okerlund, M. Balestra, E. Stowell, L. Westendorf, C. Pollalis, L. Westort, J. Davis, M. Ball. BacToMars: Creative Engagement with Bio-Design for Children, Proc. IDC '17.

For more information on this ongoing project, including a video, see the TangiBac page of Wellesley College's HCI Lab.

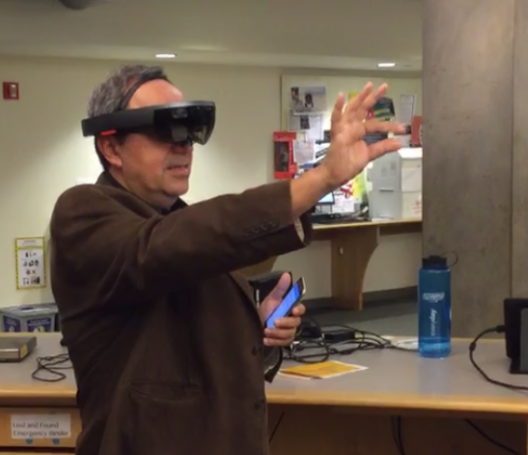

HoloMuse

Wellesley College's Human Computer Interaction Lab

Project Brief:

A collaboration with the Davis Museum at Wellesley College to create a HoloLens application that allows users to curate their own exhibits using 3D-scanned artifacts from Ancient Greece. Curating involves placing objects in space and recording information about each one. Other users can then load and view the exhibit and click an object to hear information about it. The project aims to test how this device can change the museum experience and make it more informative.

My Part in the Project Included:

- Developing (Unity and C#) back-end elements of the augmented reality application, including scaling, rotating, and moving objects, as well as the ability for users to remove materials, remove objects from the scene, and hide and display information, and implementing audio commands for several of these interactions.

- Presenting this work at Wellesley College conferences.

Publications:

- W. Fahnbulleh, J. Davis. HoloMuse: Enhancing Engagement with Archaeological Artifacts Through Gesture-Based Interaction with Holograms. Wellesley Ruhlman Conference.

For more information on this ongoing project, including a video, see the holoSTEAM page of Wellesley College's HCI Lab.

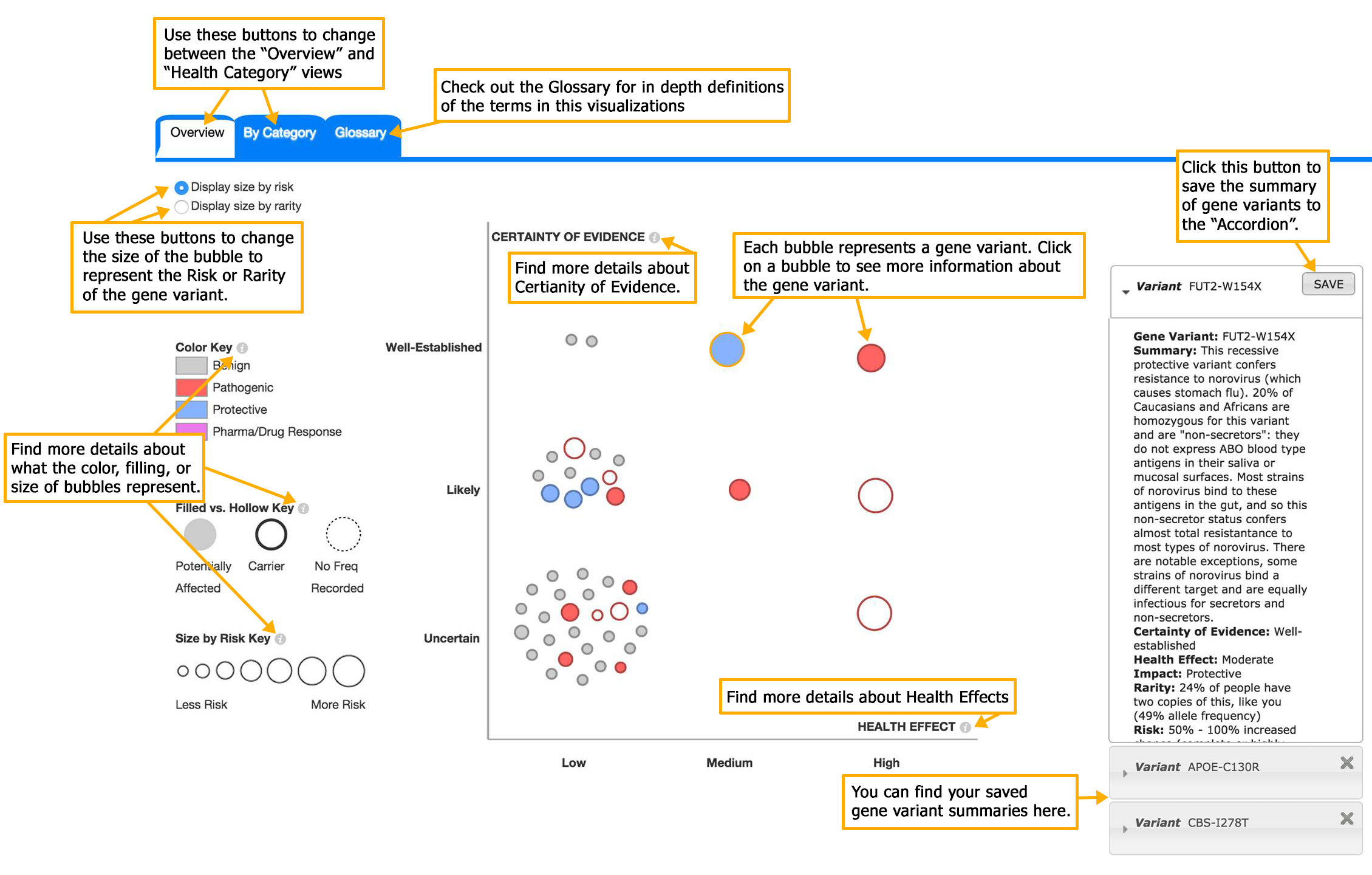

Human-Computer Interaction for Personal Genomics

Wellesley College's Human Computer Interaction Lab

Project Brief:

Questions about how people make sense of and engage with their personal genomic information, and how comfortable they feel sharing it to advance scientific and biomedical research, are not only of paramount importance for society and policy makers but also a pressing issue for HCI researchers. Funded by the National Science Foundation, and in collaboration with the Harvard Personal Genome Project, we investigate how to design effective interaction techniques that empower non-expert users to engage with their personal genomic information. We also explore how user interface design interventions in online consent forms can support informed decision-making and help users make decisions about their personal genomic information that are right for them.

My Part in the Project Included:

- Coding (CSS, JavaScript, HTML, AJAX, PHP, and SQL) the back end and front end of interactive features (commenting and highlighting) added to an online consent form to promote community interaction and understanding.

- Consulting on and helping to code (HTML, CSS, JavaScript, and AJAX) the design and other aspects of the data visualization for personal genome reports.

- Acting as a beta tester and manager for both portions of the Personal Genomics Project team's work.

- Presenting this work at Wellesley College conferences.

Publications:

- O. Shaer, O. Nov, J. Okerlund, M. Balestra, E. Stowell, L. Westendorf, C. Pollalis, L. Westort, J. Davis, M. Ball. GenomiX: A Novel Interaction Tool for Self-Exploration of Personal Genomic Data, Proc. CHI '16 Computer-Human Interaction.

- J. Davis, C. Pollalis, L. Westort. New Human-Computer Interaction Interventions for Personal Genomics. Wellesley Tanner Conference '15.

For more information on this ongoing project, including a video, see the PGHCI page of Wellesley College's HCI Lab.

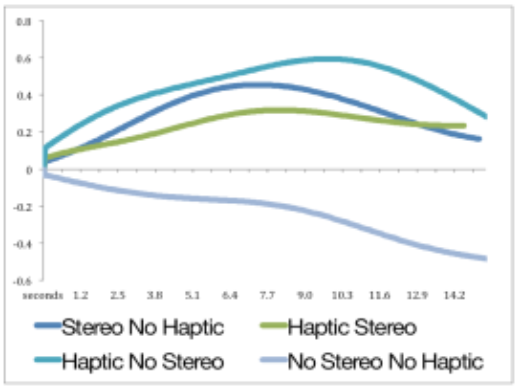

An In-Depth Look at the Benefits of Immersion Cues on Spatial 3D Problem Solving

Wellesley College's Human Computer Interaction Lab

Project Brief:

3D stereoscopic displays for desktop use show promise for augmenting users' performance on spatial problem-solving tasks. These displays can provide different types of immersion cues, including binocular parallax, motion parallax, proprioception, and haptics. Such cues can be powerful tools for increasing the realism of the virtual environment, because they make interactions in the virtual world more similar to interactions in the real, non-digital world. However, little work has been done to understand the effects of such immersion cues on users' understanding of the virtual environment. We present a study in which users solve spatial puzzles on a 3D stereoscopic display under different immersion conditions while we measure their cognitive workload using fNIRS (functional near-infrared spectroscopy) and ask them questions about their subjective workload. We conclude that:

- stereoscopic display leads to lower task completion time, lower physical effort, and lower frustration

- vibrotactile feedback results in increased perceived immersion and in higher cognitive workload

- increased immersion (which combines stereo vision with vibrotactile feedback) does not result in reduced cognitive workload.

My Part in the Project Included:

- Compiling the puzzles into a format suitable for a study with multiple test groups.

- Coding (C# and JavaScript) a framework for the puzzle-related computer aspects of the study.

- Writing the script used by the experimenters when conducting studies, compiling and running basic analytics on the collected data, and helping to write and edit papers based on that data.

- Presenting this work at conferences held in Hawaii and at Wellesley College.

Publications:

- E. Solovey, J. Okerlund, C. Hoef, J. Davis, O. Shaer. Augmenting Spatial Skills with Semi-Immersive Interactive Desktop Displays: Do Immersion Cues Matter? Augmented Human '15.

- C. Hoef, J. Davis, O. Shaer, E. Solovey. An In-Depth Look at the Benefits of Immersion Cues on Spatial 3D Problem Solving. Wellesley Ruhlman Conference '15. SUI '14.

For more information, see the Beyond the Screen page of Wellesley College's HCI Lab.